SDK 3.4 Release Information

2021-01-15

Augmented Reality API

Both the core IndoorAtlas positioning SDK and Augmented Reality frameworks (ARCore / ARKit) track the movement of a mobile device. However, they focus on different things:

- ARCore and ARKit track motion accurately in a local metric coordinate system. They can tell you very accurately that the phone moved 3 centimeters to the left and 4 centimeters up compared to where it was 0.3 seconds ago, but the starting position is arbitrary. They do not tell you if the phone is in Finland or in the US.

- IndoorAtlas technology determines the global position and orientation of the device. The SDK tells you your floor level and the position within a floor plan with meter-level accuracy (depending on the case). However, up until now, the location has updated approximately once a second and has not followed centimeter-level changes.

The new IndoorAtlas AR fusion API merges these two sources of information: you can both track centimeter-level changes and know approximately where the device is in global coordinates. This does not mean that we have global centimeter-level accuracy, but it allows you to conveniently use approximately geo-referenced content so that it looks reasonable in the AR world. It also works indoors, where using the compass directly for orientation is challenging due to calibration issues and local geomagnetic features (the same things we embrace in geomagnetic positioning).
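As a rough illustration of what placing approximately geo-referenced content in the AR world involves, the sketch below converts a latitude/longitude into AR-frame coordinates, given an estimate of where the AR origin sits in the world and which way it faces. This is not the IndoorAtlas AR fusion API: `GlobalPose` and `geo_to_ar` are hypothetical names, the flat-earth approximation is an assumption that only holds over building-scale distances, and the axis convention (x to the right, -z forward) is assumed for illustration.

```python
import math
from dataclasses import dataclass
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; adequate over building-scale distances


@dataclass
class GlobalPose:
    """Hypothetical fused estimate of where the AR origin sits in the world."""
    lat_deg: float      # latitude of the AR origin
    lon_deg: float      # longitude of the AR origin
    heading_deg: float  # bearing of the AR frame's forward axis, clockwise from north


def geo_to_ar(pose: GlobalPose, lat_deg: float, lon_deg: float) -> Tuple[float, float]:
    """Return (x, z) in the assumed AR frame for a geo-referenced point."""
    # Equirectangular approximation: metres north/east of the AR origin.
    north = math.radians(lat_deg - pose.lat_deg) * EARTH_RADIUS_M
    east = (math.radians(lon_deg - pose.lon_deg)
            * EARTH_RADIUS_M * math.cos(math.radians(pose.lat_deg)))
    # Rotate the north/east offset into the AR frame using the estimated heading.
    h = math.radians(pose.heading_deg)
    forward = north * math.cos(h) + east * math.sin(h)
    right = east * math.cos(h) - north * math.sin(h)
    return right, -forward  # forward is the negative z direction


# A point 10 m north of the origin, with the AR frame facing north.
pose = GlobalPose(lat_deg=60.0, lon_deg=25.0, heading_deg=0.0)
ten_m_north = 60.0 + math.degrees(10.0 / EARTH_RADIUS_M)
x, z = geo_to_ar(pose, ten_m_north, 25.0)
```

Because the global pose is only known to roughly meter level, content placed this way lands approximately where it should, while the AR framework keeps it stable at centimeter level as the device moves.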

When available, the platform-provided AR motion tracking (VIO) is deeply integrated into the IndoorAtlas sensor fusion framework, which allows us to increase the robustness and accuracy of the sensor fusion algorithm. When VIO is not available, for example because the phone is in a bag or pocket, which occludes the camera and disables AR, tracking can still continue with IndoorAtlas background PDR (added in SDK 3.2). If the AR session is later resumed, AR wayfinding can continue almost instantly from an accurate location.

API documentation is available for the new AR fusion API.

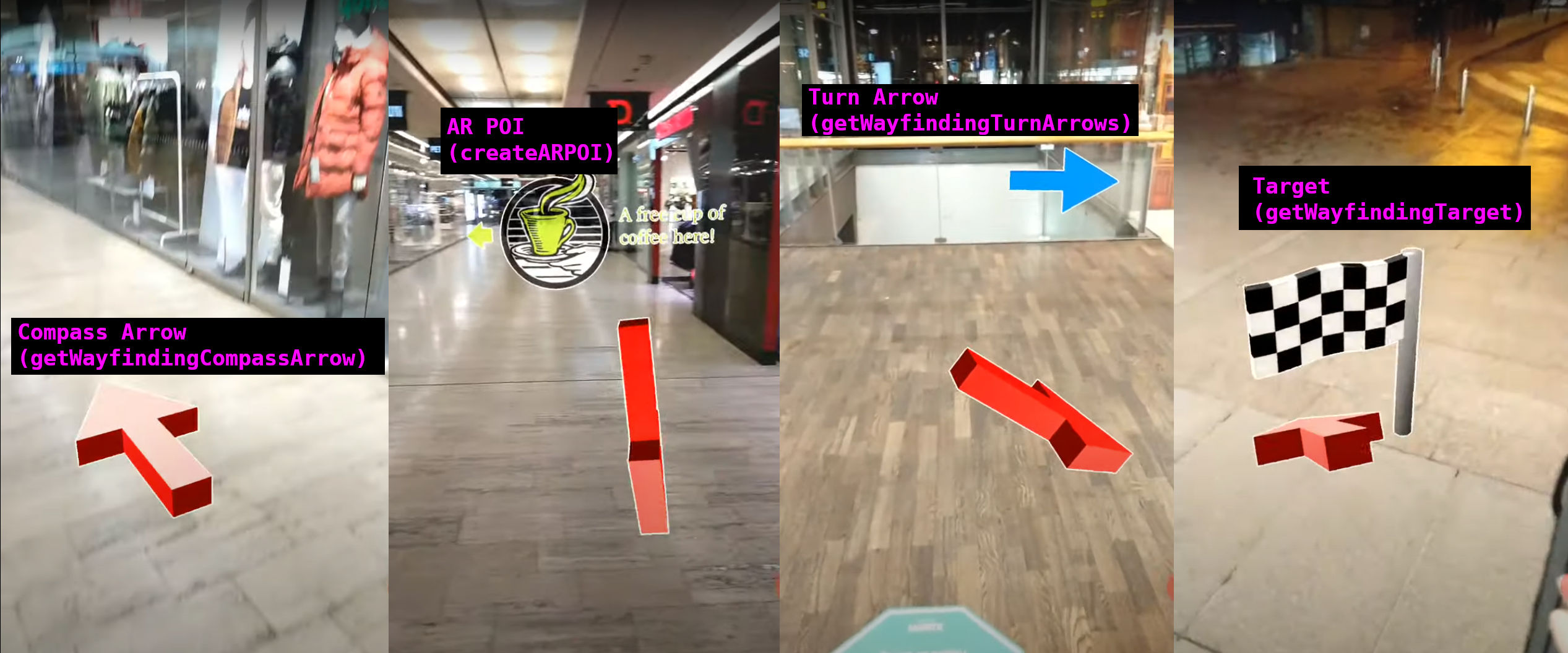

The following image also illustrates the main elements in the API using screenshots from the above video:

AR API elements in the demo video

To use the new AR features, please contact IndoorAtlas sales & support.

Other new features

SDK 3.4 also includes the following new features:

- Updated Wayfinding API: In addition to requesting wayfinding routes from the current location to a destination B, routes can now also be requested between arbitrary points A and B.

- Time-based location updates for iOS: This feature, previously included only in the Android SDK, has now been added to the iOS SDK. Time-based filtering is useful, for example, for receiving location updates even when standing still.
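To show why a time-based filter matters when standing still, here is a minimal sketch of a filter that passes a location fix when the device has moved far enough or when enough time has elapsed. It is a conceptual illustration only: `Fix` and `UpdateFilter` are hypothetical names, not SDK classes, and the SDK's actual filtering semantics may differ.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Fix:
    t: float  # timestamp in seconds
    x: float  # position in metres
    y: float


class UpdateFilter:
    """Hypothetical filter: pass a fix if it moved far enough OR enough time passed."""

    def __init__(self, min_distance_m: float, min_interval_s: Optional[float] = None):
        self.min_distance_m = min_distance_m
        self.min_interval_s = min_interval_s  # None disables time-based updates
        self._last: Optional[Fix] = None      # last fix that was passed on

    def accept(self, fix: Fix) -> bool:
        last = self._last
        if last is None:
            passed = True  # always report the first fix
        else:
            moved = math.hypot(fix.x - last.x, fix.y - last.y) >= self.min_distance_m
            timed = (self.min_interval_s is not None
                     and fix.t - last.t >= self.min_interval_s)
            passed = moved or timed
        if passed:
            self._last = fix
        return passed


# Six fixes, one per second, with the device standing still.
still = [Fix(t, 0.0, 0.0) for t in range(6)]
distance_only = UpdateFilter(min_distance_m=1.0)
with_time = UpdateFilter(min_distance_m=1.0, min_interval_s=2.0)
print([f.t for f in still if distance_only.accept(f)])
print([f.t for f in still if with_time.accept(f)])
```

With only a distance threshold, a stationary device produces a single update and then goes silent; adding the time interval keeps updates flowing at a predictable rate.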